Started studying explainability!

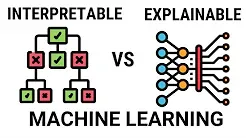

This week I started studying explainability in machine learning, a topic I plan to use in my undergraduate thesis. I am very excited about it, as it is an important area of machine learning research. I have been reading papers and articles about the different methods and techniques used to explain machine learning models, and I am particularly interested in how these methods can be applied to improve the interpretability of intrusion detection systems. I have chosen two methods to study: LIME and SHAP.

Why explainability?

It’s very hard to understand how machine learning models work, and in particular how they arrive at a given decision. This is a problem because we need to be able to trust these models, especially in critical areas like healthcare and cybersecurity, and it has led to a growing focus on interpretability and explainability in machine learning. For example, suppose you applied for a loan at a bank and were rejected. You want to know the reason, but the customer service agent replies that an algorithm dismissed the application and they cannot determine why.